Lambda Provisioned Concurrency - Improving AWS Lambda Cold Start Times

AWS Lambda is an awesome service for building serverless applications that are scalable and easy to maintain by default.

But of course, there is no free lunch in IT.

One of the main drawbacks of AWS Lambda is cold starts.

This article explains what cold starts are, why they occur, and how to get rid of them with Provisioned Concurrency.

Cold Starts

What are Cold Starts?

When working with serverless solutions such as AWS Lambda, there is no server that runs 24/7.

When you send a request to your lambda, a few things need to happen first:

- The environment needs to be provisioned

- The environment needs to be initialized

A cold start is the time Lambda needs for both steps before it runs your handler code, i.e. your business logic.

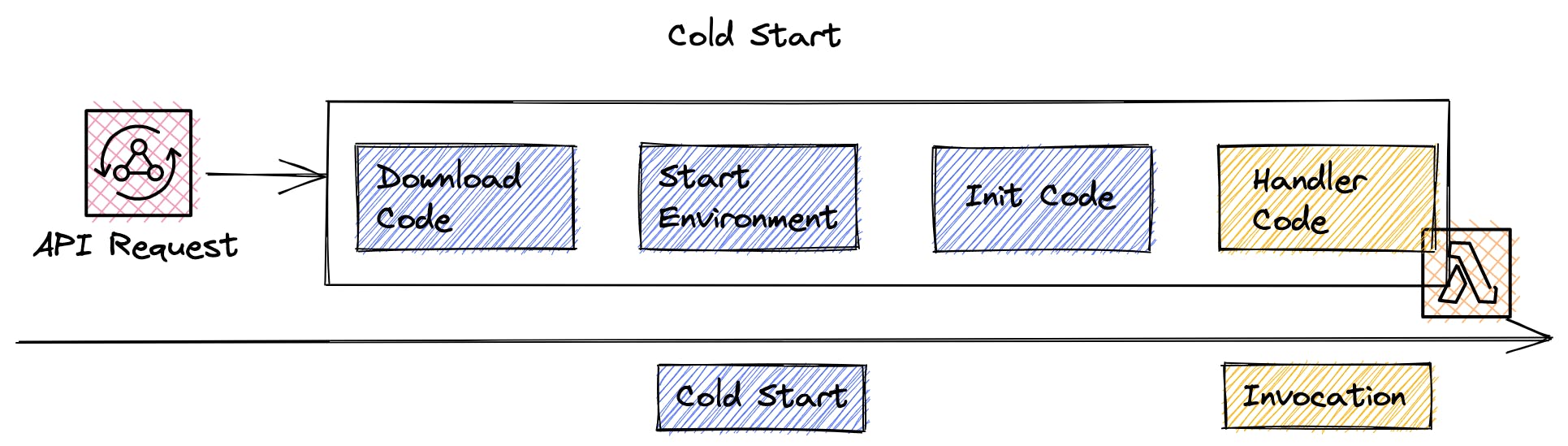

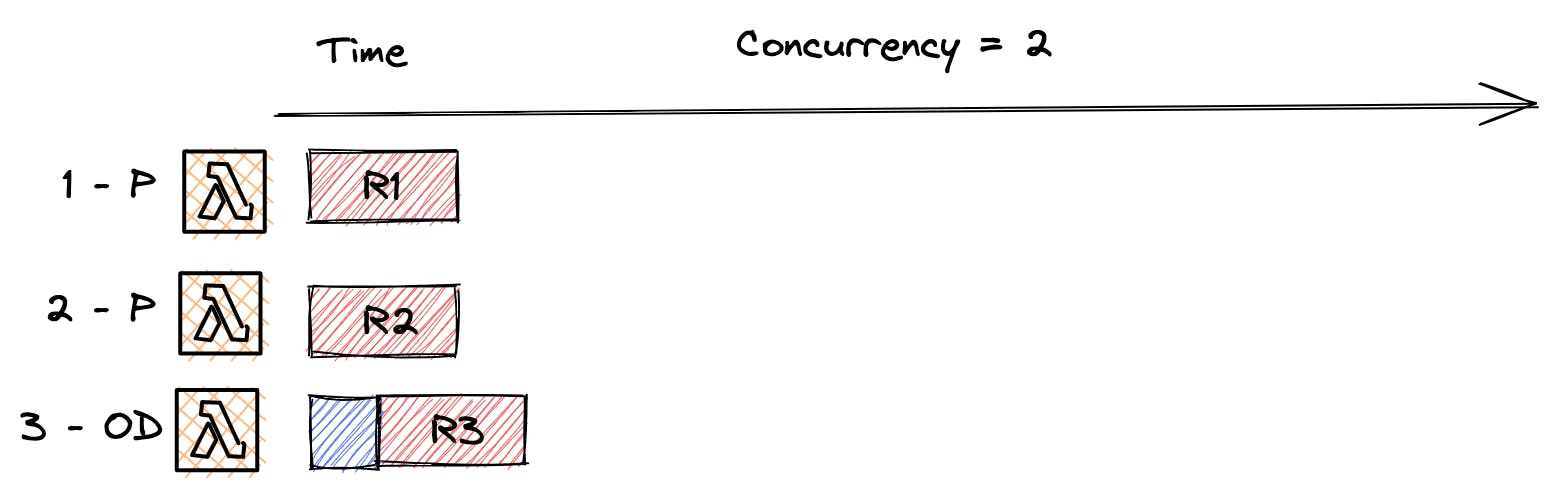

Here you can see the steps it takes if a lambda first needs to start.

The steps are:

- Download code (from S3 or ECR)

- Start execution environment (internal container)

- Execute initialization code (outside of handler code)

- Execute handler code (actual lambda code)

Everything in blue is referred to as a cold start.

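Historically, you were not charged for cold start time on standard on-demand functions, but AWS has changed how the initialization phase is billed over time, so check the current pricing.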

But of course, it adds latency to your response time.

Everything in orange is your actual invocation time.

This time will be charged.

It is important to note that the initialization code runs only once for each new environment.

That means if every invocation of your lambda needs the same dependencies, connections, or imports, put them outside of the handler function. Warm invocations then skip that work and the response time can decrease dramatically.
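The split between initialization code and handler code can be sketched as follows. This is a minimal illustration, not a real integration: `create_client` is a hypothetical stand-in for expensive setup such as opening a database connection, and `INIT_RUNS` exists only to make the effect visible.

```python
import time

INIT_RUNS = 0  # counts how often the setup ran (illustration only)


def create_client():
    """Hypothetical stand-in for expensive setup, e.g. opening a database connection."""
    global INIT_RUNS
    INIT_RUNS += 1
    time.sleep(0.05)  # simulate slow setup work
    return {"connected": True}


# Initialization code: runs once per execution environment, during the cold start.
CLIENT = create_client()


def handler(event, context):
    # Handler code: runs on every invocation and reuses the module-level client.
    return {"statusCode": 200, "connected": CLIENT["connected"]}
```

Calling `handler` repeatedly in the same environment reuses `CLIENT`, so the setup cost is paid only once.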

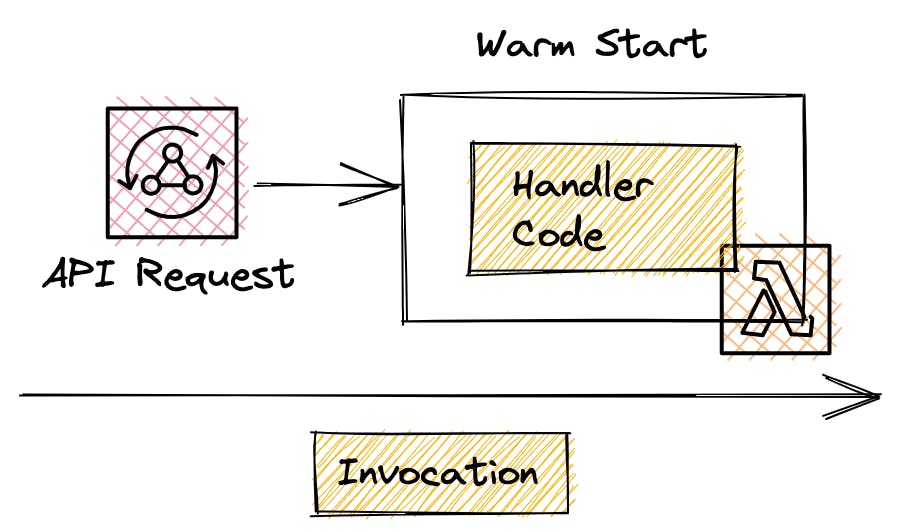

After a cold start, the execution environment will not be destroyed immediately. If more requests arrive within a short time window (the exact duration is not documented), the same environment will be reused.

Invocations that reuse an existing environment are referred to as warm starts. In that case, only the handler code is executed.

When do Cold Starts happen?

There are different cases when cold starts happen. The main ones are:

Lambda was not used for a specific time

After an unspecified period without requests, the lambda execution environment is deleted. Unfortunately, you cannot configure this period, nor do you know how long it will be.

Lambda needs to scale-out

Lambda is great for scaling purposes. When it needs to scale out, a new environment is initialized and another cold start happens.

You don't know exactly when it needs to scale, so your application needs to be able to handle these cold starts.

Code was changed

If you change your lambda code and redeploy, all execution environments will be destroyed and re-provisioned.

So cold starts will happen again.

Overcoming Cold Starts

Now that we know what cold starts are, we can look at how to deal with them.

There are two general approaches:

- Function warmers

- Provisioned Concurrency

Function Warmers

As described above, the execution environment is not destroyed immediately after an invocation.

Therefore, if we just call our lambda often enough the environment should always be warm, right?

Not exactly.

As we saw, some of the causes of cold starts are not deterministic, so we do not know when they will happen.

For example, Lambda may decide to scale out or to refresh the environment.

Also, if you have a production workload with ongoing traffic, you don't need a function warmer because your function is invoked all the time.
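For completeness, a function warmer is typically a scheduled trigger that pings the function with a marker payload. The sketch below assumes a hypothetical convention where the ping event contains `"warmer": true`; the key name and the `process` helper are made up for illustration.

```python
def process(event):
    """Hypothetical business logic."""
    return f"processed {event.get('name', 'unknown')}"


def handler(event, context):
    # Assumed convention: the scheduled warmer sends {"warmer": true}.
    if isinstance(event, dict) and event.get("warmer"):
        # Return early so the ping keeps the environment alive
        # without running the business logic.
        return {"warmed": True}
    return {"statusCode": 200, "body": process(event)}
```

A single ping keeps only one environment warm, which is why a warmer does not help when Lambda scales out to additional environments.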

Provisioned Concurrency

This article is about provisioned concurrency, so let's talk about that. Provisioned concurrency eliminates cold starts for requests that are served by the provisioned environments.

The idea behind provisioned concurrency is that a number of lambda environments are always ready and set to go.

So each environment you define as provisioned is always warm. This of course comes at a higher price because AWS needs to provision these environments and keep them available all the time.

It is not really an on-demand option.

The actual price for the invocation time, however, is a bit lower compared to on-demand lambdas. But since the provisioned environments are kept available 24/7, you pay for them whether or not they serve requests.
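The cost structure can be sketched as simple arithmetic. All rates are parameters here because the numbers used in the usage note are placeholders, not actual AWS prices. The model also ignores per-request charges and assumes a 30-day month.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # simplification: a 30-day month


def on_demand_cost(invocations, avg_seconds, memory_gb, duration_rate):
    """Duration charge for on-demand invocations: GB-seconds times the rate."""
    return invocations * avg_seconds * memory_gb * duration_rate


def provisioned_cost(environments, invocations, avg_seconds, memory_gb,
                     keep_warm_rate, duration_rate, seconds=SECONDS_PER_MONTH):
    """Always-on charge for the configured environments plus the duration charge."""
    always_on = environments * memory_gb * seconds * keep_warm_rate
    duration = invocations * avg_seconds * memory_gb * duration_rate
    return always_on + duration
```

With placeholder rates, `provisioned_cost(1, 0, 0.5, 1.0, 0.000004, 0.00001)` is greater than zero even though no invocation happened, while `on_demand_cost(0, 0.5, 1.0, 0.00002)` is zero. Plugging in the current rates from the pricing page shows at which traffic level provisioned concurrency pays off.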

What you need to plan carefully is the number of provisioned environments. For example, if you set provisioned concurrency to 5, you will always have 5 environments ready for requests.

The cold start is eliminated because the environments are initialized ahead of time. But it is only eliminated for these 5 environments and not more.

Let's take a look at the invocation patterns to understand them better.

Invocation Patterns

Internally, Lambda puts the events it receives from asynchronous invocations into a queue.

If you send 3 messages, all of them are added to the queue and then assigned to lambda execution environments.

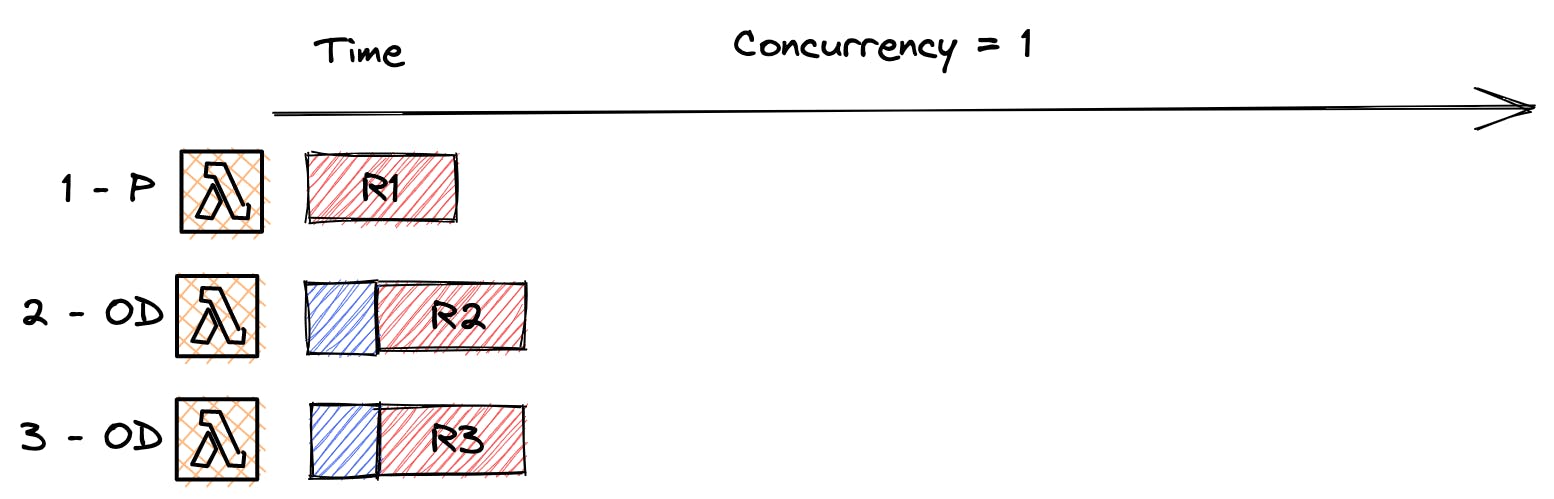

We are talking about provisioned concurrency and not reserved concurrency. I'll explain reserved concurrency in a bit. With provisioned concurrency, if more requests arrive at the same time than there are warm environments, Lambda simply spins up a new on-demand environment, and that request experiences a cold start.

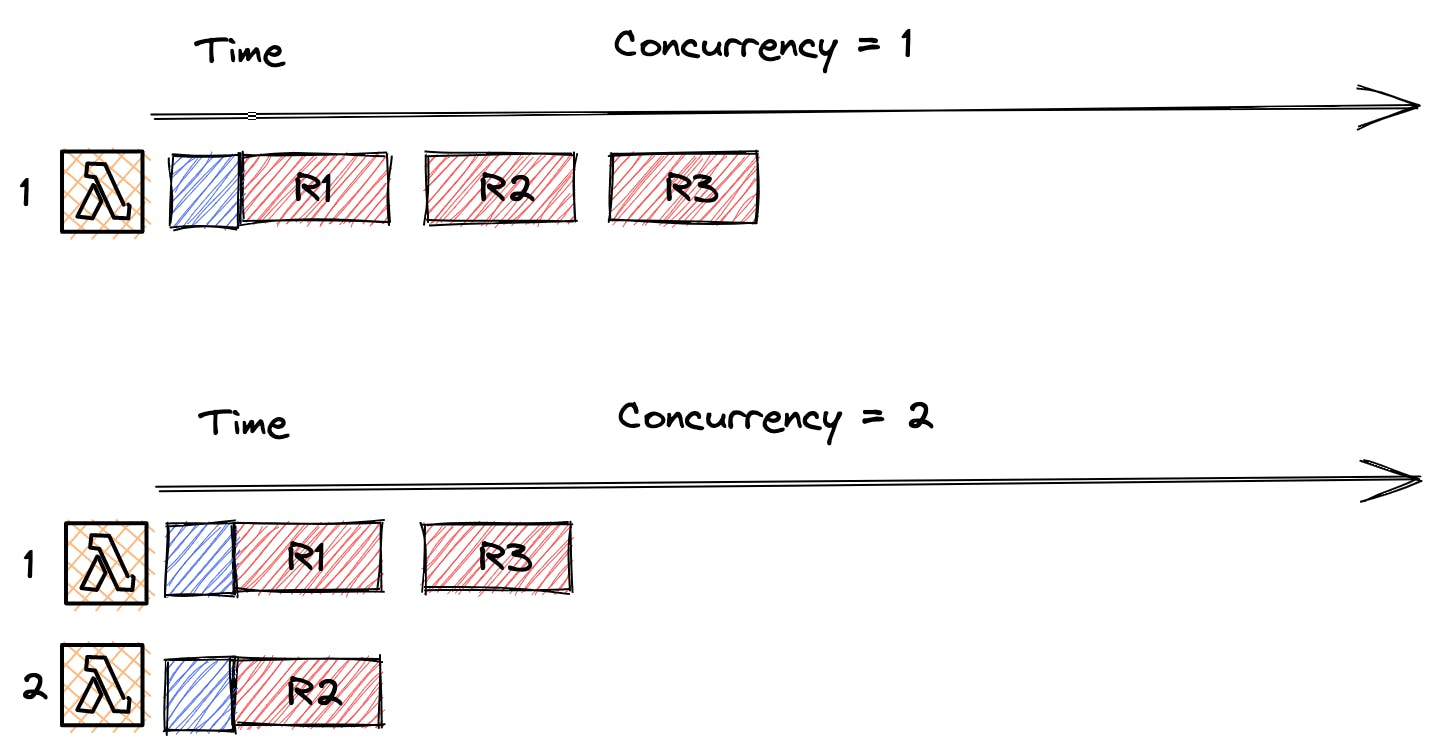

Let's have a look at two examples: three simultaneous requests with a provisioned concurrency of one and with a provisioned concurrency of two.

With a provisioned concurrency of one, the first request is executed without a cold start and the other two experience cold starts in on-demand environments.

With a provisioned concurrency of two, the first two requests won't experience a cold start but the third one will.
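The two examples can be reproduced with a small model. It is a simplification that assumes all requests arrive at the same moment and that no on-demand environment is already warm. The function name is made up for illustration.

```python
def classify_requests(simultaneous_requests, provisioned_concurrency):
    """Label each simultaneous request as 'warm' or 'cold'.

    Requests are served by provisioned environments first; every request
    beyond that number needs a new on-demand environment (cold start).
    """
    labels = []
    for index in range(simultaneous_requests):
        if index < provisioned_concurrency:
            labels.append("warm")
        else:
            labels.append("cold")
    return labels
```

For three requests, `classify_requests(3, 1)` yields one warm and two cold requests, and `classify_requests(3, 2)` yields two warm requests and one cold request.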

Provisioned concurrency adds some complexity because you need to plan your architecture and estimate how many messages and invocations your data flow will have. In return, requests served by provisioned environments avoid cold starts completely.

Reserved Concurrency

One thing that is often confused with provisioned concurrency is reserved concurrency. Reserved concurrency sets the maximum number of concurrent environments for your lambda, which stops it from scaling out beyond that limit.

This does not help us with cold starts. Consider the same three requests with a reserved concurrency of one and of two.

The lambda with a reserved concurrency of one just executes the messages one by one, and the first one has a cold start. The same applies with a reserved concurrency of two: at most two messages are processed in parallel, and the first request in each environment has a cold start.
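The behavior can be sketched as a small timing model. It assumes that waiting requests are queued rather than rejected, that every request takes the same time, and that each environment pays the cold start only on its first request. The durations are arbitrary illustration values.

```python
def completion_times(requests, reserved_concurrency, cold_start=1.0, duration=2.0):
    """Return the finish time of each queued request under a concurrency limit."""
    free_at = [0.0] * reserved_concurrency  # when each environment becomes free
    used = [False] * reserved_concurrency   # whether an environment is already warm
    finished = []
    for _ in range(requests):
        # Pick the environment that becomes free first.
        slot = min(range(reserved_concurrency), key=lambda i: free_at[i])
        penalty = 0.0 if used[slot] else cold_start
        used[slot] = True
        free_at[slot] = free_at[slot] + penalty + duration
        finished.append(free_at[slot])
    return finished
```

With these values, `completion_times(3, 1)` returns `[3.0, 5.0, 7.0]` and `completion_times(3, 2)` returns `[3.0, 3.0, 5.0]`. In both cases the first request per environment still includes the cold start.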

Differences On-Demand Lambdas vs. Provisioned Concurrency

There are a couple of differences to note when comparing provisioned concurrency to on-demand lambdas.

1. Initialization doesn't need to be optimized

As we saw, requests served by provisioned environments do not experience a cold start. That means optimizing the initialization code matters far less.

The code runs once ahead of time and after that, the environment is ready.

This is especially important for languages such as Java and C#, since they tend to have really long cold start times.

2. Initialization code is run more often than the total number of invocations

The initialization code is automatically run multiple times to ensure high availability.

The environments run in different availability zones, and as load balancing and renewal of environments happen, the initialization code is executed several times, even when no invocation happens.

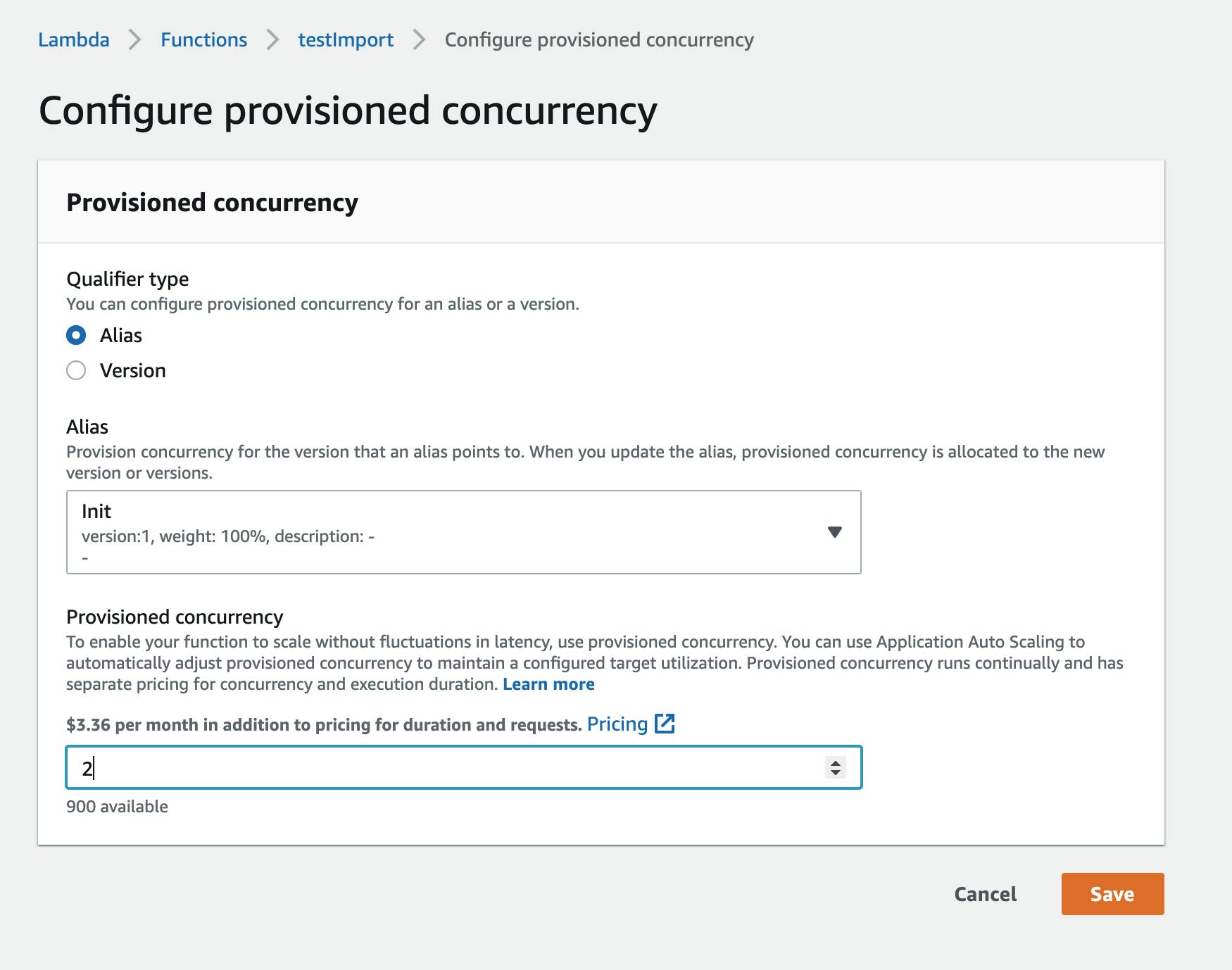

3. Provisioned Concurrency cannot use the $LATEST version

The $LATEST version cannot be used with provisioned concurrency. You always need to configure it on an alias or a published version of the lambda.
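The same constraint applies when you configure provisioned concurrency through the API. The sketch below takes a boto3 Lambda client as a parameter. The helper name, function name, and alias name are assumptions for illustration, and `create_alias` fails if the alias already exists (in that case `update_alias` would be the call to use).

```python
def enable_provisioned_concurrency(lambda_client, function_name, alias_name, environments):
    """Publish a version, point a new alias at it, and provision environments for it."""
    if alias_name == "$LATEST":
        raise ValueError("provisioned concurrency needs a version or alias, not $LATEST")
    # Publish an immutable version from the current $LATEST code.
    version = lambda_client.publish_version(FunctionName=function_name)["Version"]
    # Create an alias that points at the published version.
    lambda_client.create_alias(
        FunctionName=function_name, Name=alias_name, FunctionVersion=version
    )
    # Configure provisioned concurrency on the alias.
    return lambda_client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=alias_name,
        ProvisionedConcurrentExecutions=environments,
    )

# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   enable_provisioned_concurrency(boto3.client("lambda"), "orders", "live", 5)
```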

Activating Provisioned Concurrency

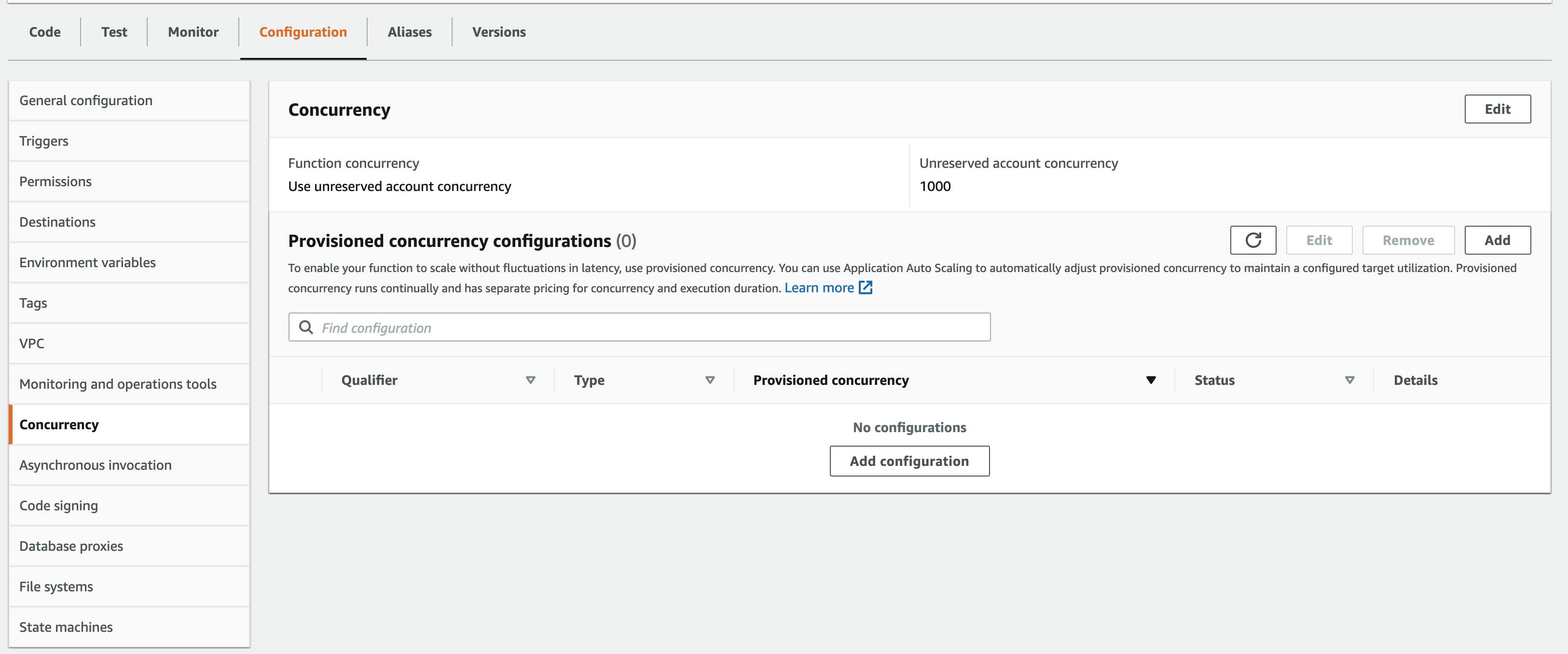

You can activate provisioned concurrency in your lambda configuration settings.

Head to your lambda -> Configuration -> Concurrency

Here you can create a new configuration.

As mentioned above, you need to have an alias or a lambda version deployed to choose from.

You can set the number of environments that need to be ready here as well. You can also adjust that number automatically with Application Auto Scaling rules.

Check the pricing beforehand because provisioned concurrency can get quite expensive.
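As a rough sketch, such auto scaling rules can be expressed as two Application Auto Scaling requests: one that registers the alias as a scalable target and one that attaches a target tracking policy. The helper name, policy name, and the 0.7 utilization target are assumptions for illustration.

```python
def build_autoscaling_requests(function_name, alias_name, min_environments,
                               max_environments, target_utilization=0.7):
    """Build request parameters for register_scalable_target and put_scaling_policy."""
    common = {
        "ServiceNamespace": "lambda",
        "ResourceId": f"function:{function_name}:{alias_name}",
        "ScalableDimension": "lambda:function:ProvisionedConcurrency",
    }
    scalable_target = {
        **common,
        "MinCapacity": min_environments,
        "MaxCapacity": max_environments,
    }
    scaling_policy = {
        **common,
        "PolicyName": f"{function_name}-{alias_name}-utilization",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization"
            },
        },
    }
    return scalable_target, scaling_policy

# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   client = boto3.client("application-autoscaling")
#   target, policy = build_autoscaling_requests("orders", "live", 1, 10)
#   client.register_scalable_target(**target)
#   client.put_scaling_policy(**policy)
```

Building the parameters separately from the API calls keeps the sketch runnable without AWS access and makes the settings easy to review.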

Summary

That's it 🎉

This article should give you an overview of cold starts in general, when they occur, and how to overcome them by using provisioned concurrency.

It is important to note that this is only one approach to overcoming cold starts. There are many more ways to optimize your lambdas without provisioned concurrency.

As always in computer science, don't optimize before you need to. For production workloads, AWS states that cold starts typically occur in under 1% of invocations.

If you have an ongoing workload and use interpreted languages, cold starts are often not a problem.

Otherwise, if you have really long-running services and provisioned concurrency is too expensive, you can also think about using container services such as Fargate to spend less money.

They are not completely serverless anymore but are still a good approach to overcome these issues and reduce costs.

Resources

The Operating Lambda series is an awesome resource for a deep dive into Lambda performance optimizations.